ChatGPT vs human: How a S'pore professor could tell which essays were written by a reporter or the chatbot

- ChatGPT has taken the tech world by storm, impressing many users with how it can replicate tasks such as writing code and essays

- TODAY's reporter brushed up three old writing pieces from her school days and fed ChatGPT prompts to produce pieces with similar content

- The six pieces of writing were sent to a creative writing expert to see if he could tell which were written by a human

SINGAPORE — In December, one of my more tech-savvy friends asked if I wanted him to generate a story about me using artificial intelligence (AI). As a writer, I was naturally intrigued, so I agreed.

To my surprise, his AI tool produced a half-decent, if bizarre, story that ended with me serving a life sentence in prison after he’d put in the prompt “female named Si Yuan lost her iPhone and committed a war crime”.

Although the story lacked depth, it was coherent and understandable, and it fulfilled the weird parameters set by my friend. That was oddly impressive for an AI tool.

This led me down the rabbit hole of Chat Generative Pre-Trained Transformer, or ChatGPT for short, the hottest new AI breakthrough.

It is, in its own words, “a language model developed by OpenAI, designed to generate human-like text based on input prompts”.

As a creative writing student, I was curious if it could produce my schoolwork for me since most of my projects involve writing creative works that can’t just be plucked off the internet.

My editors, too, were curious about the possibilities of this new technology — could it eventually replace our newsroom of humans and make journalists obsolete?

So we put ChatGPT to the test and lined up my old schoolwork against material produced by the AI, to be judged by a professor.

I polished up an old essay, an opinion column and a piece of fiction, and fed ChatGPT prompts to write up three works with similar content to mine.

The six pieces were sent to Associate Professor Barrie Sherwood of Nanyang Technological University’s English programme. He is an expert on creative writing and a published novelist.

Assoc Prof Sherwood was told that three were written by a human and the other three by ChatGPT, and he was asked to determine which was which.

How would I fare against a robot?

Read on to find out what happened.

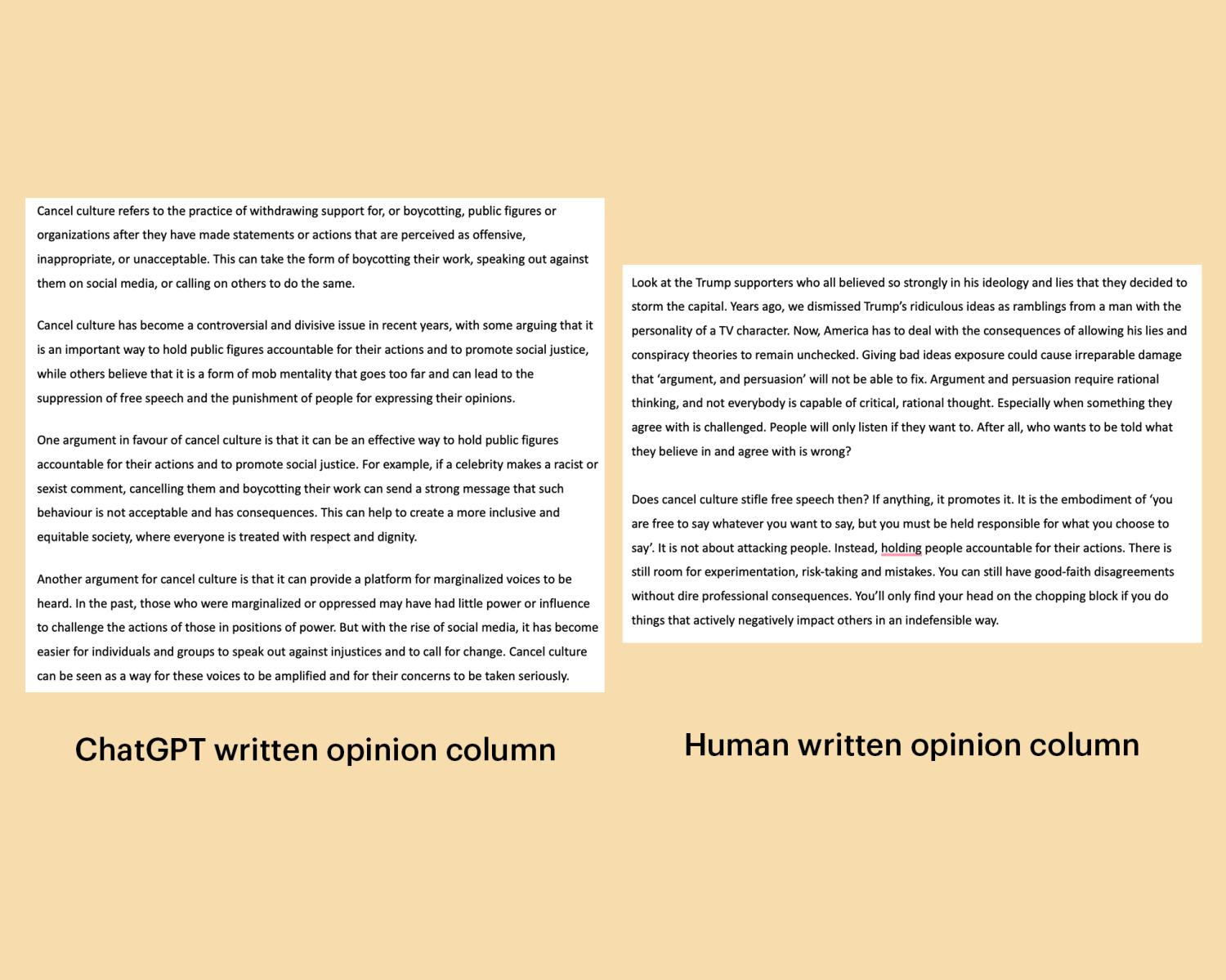

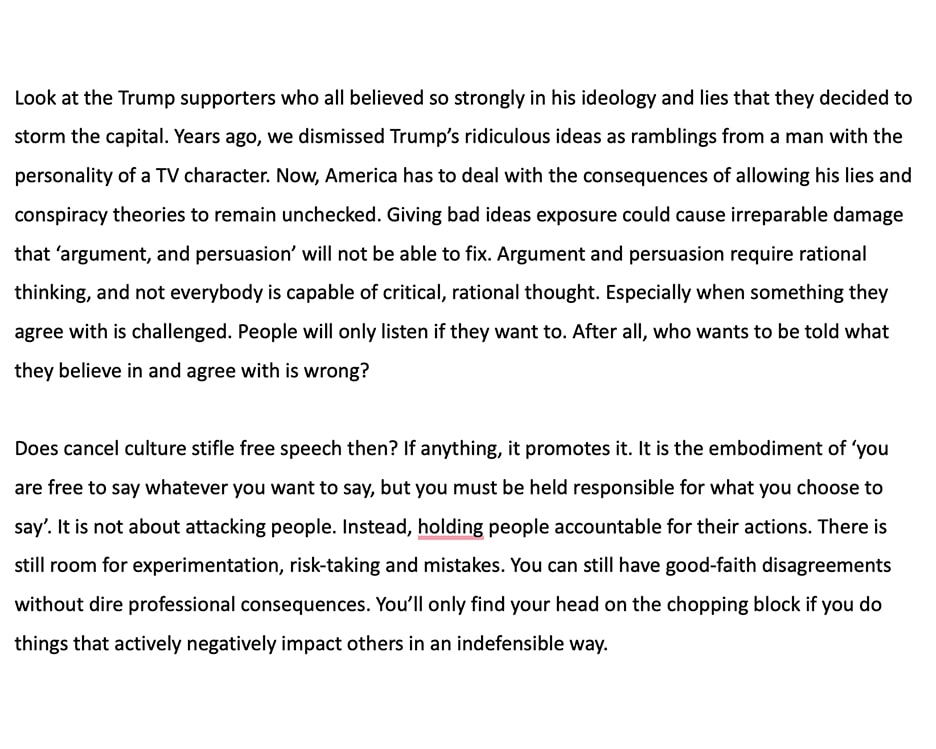

THE OPINION PIECES

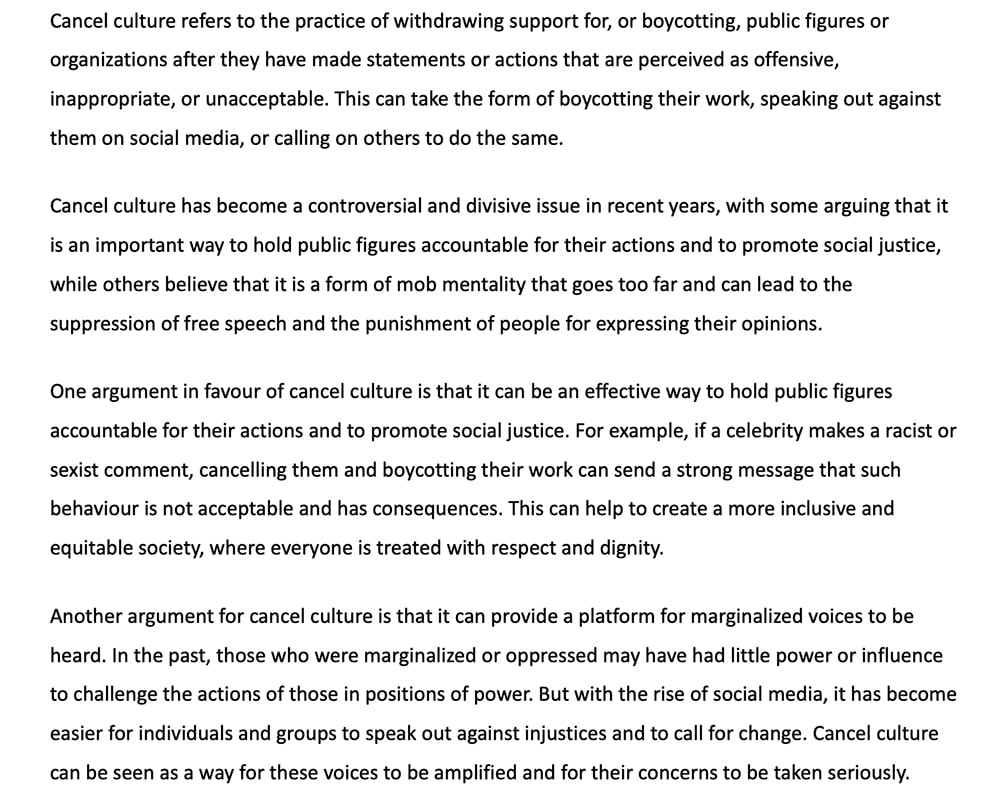

Of the three types of writing, Assoc Prof Sherwood said that it was hardest to make the call on the opinion pieces.

He’d initially assumed that the chatbot’s writing was the one with “lapses in sense and grammar” and misused idioms and metaphors.

However, the lack of errors in the other piece of writing made him suspicious.

He noted that the first opinion piece swung wildly between diction levels, that is, the type of words chosen.

The writer uses the relatively highbrow “boycotted” in one paragraph, then descends to finding herself “getting slammed”. The piece also lacked the structure and clarity of the second piece.

Yet the second piece’s arguments were less original and less nuanced than those of the first.

He eventually guessed — correctly — that the first opinion piece was mine and the second by ChatGPT.

It was a tough call given that he was unsure where the AI got its material, he said.

“If I knew it were taking it from newspapers, encyclopaedias and other formalised media, I’d be sure of my decision. But if it were sourcing from online chats and forums, then I’d reverse my decision.”

Though it stung slightly to learn that my writing was messy and littered with errors, it seems my human errors are what set me apart from the bot in this case.

Human 1 : AI Bot 0

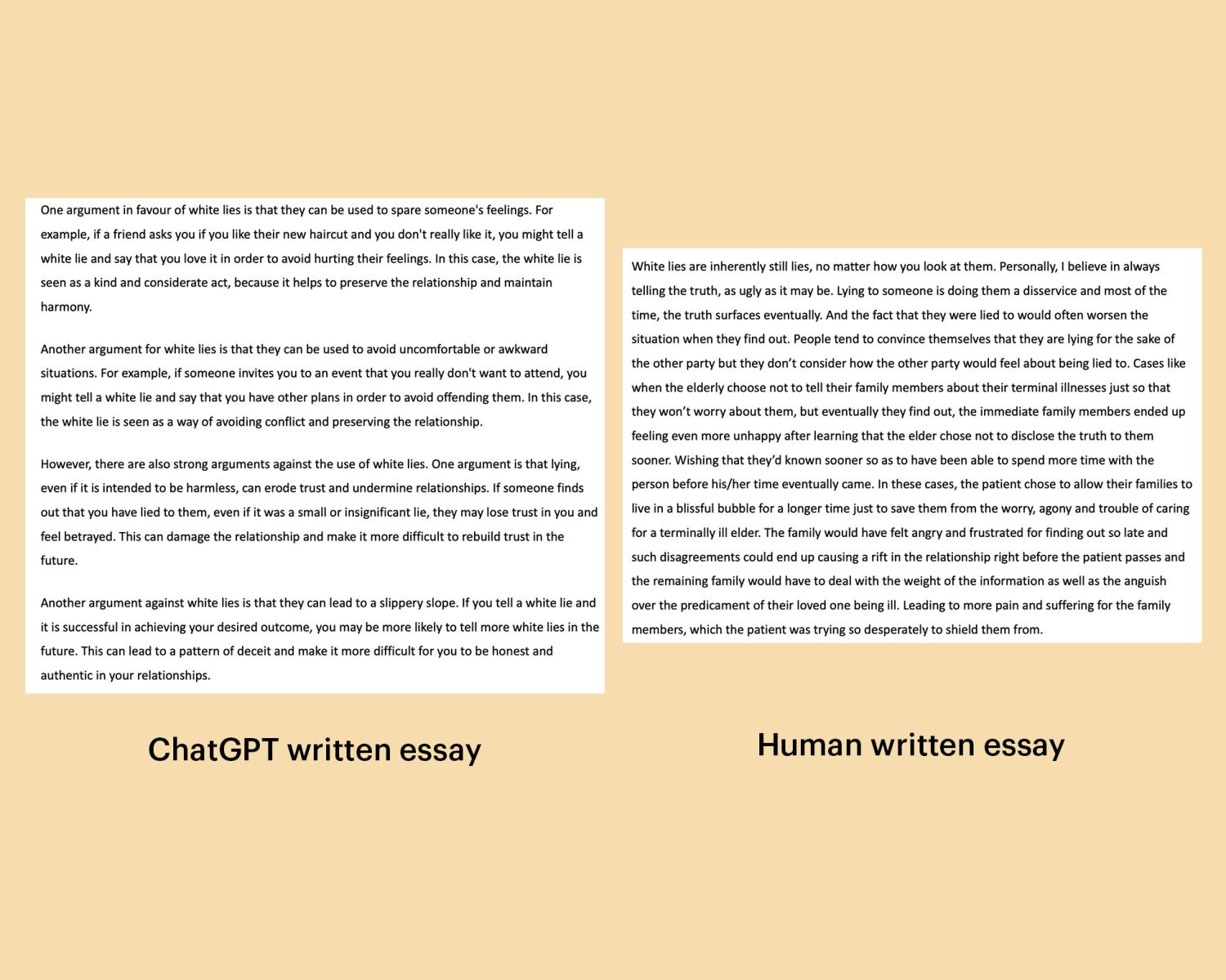

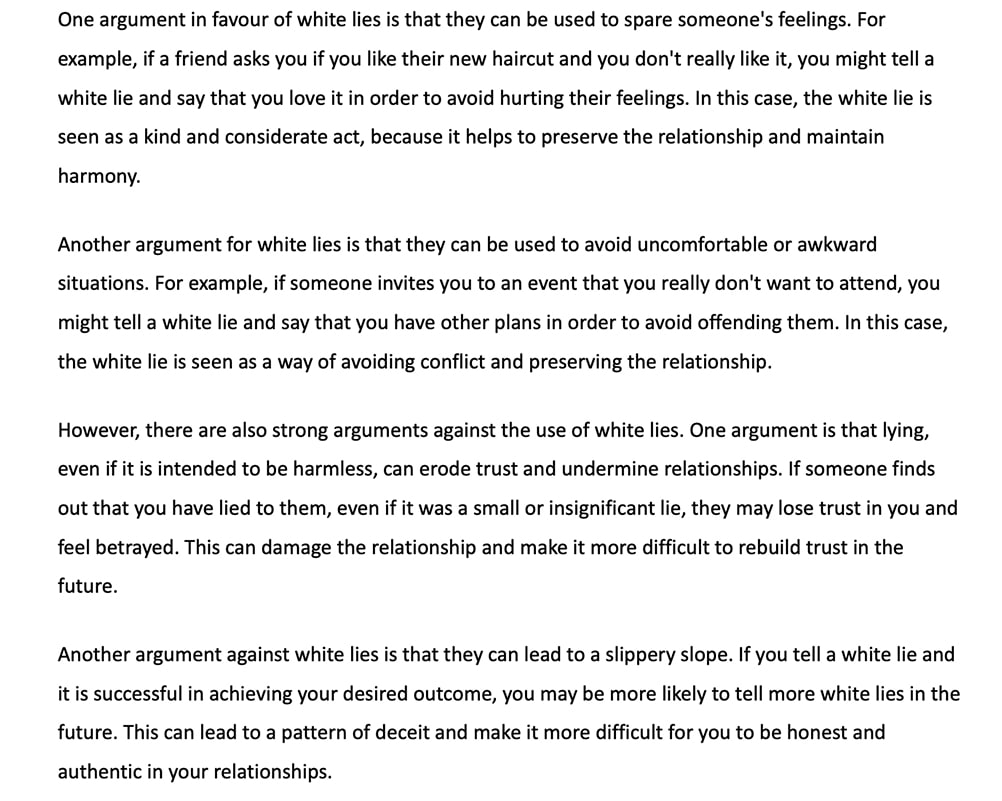

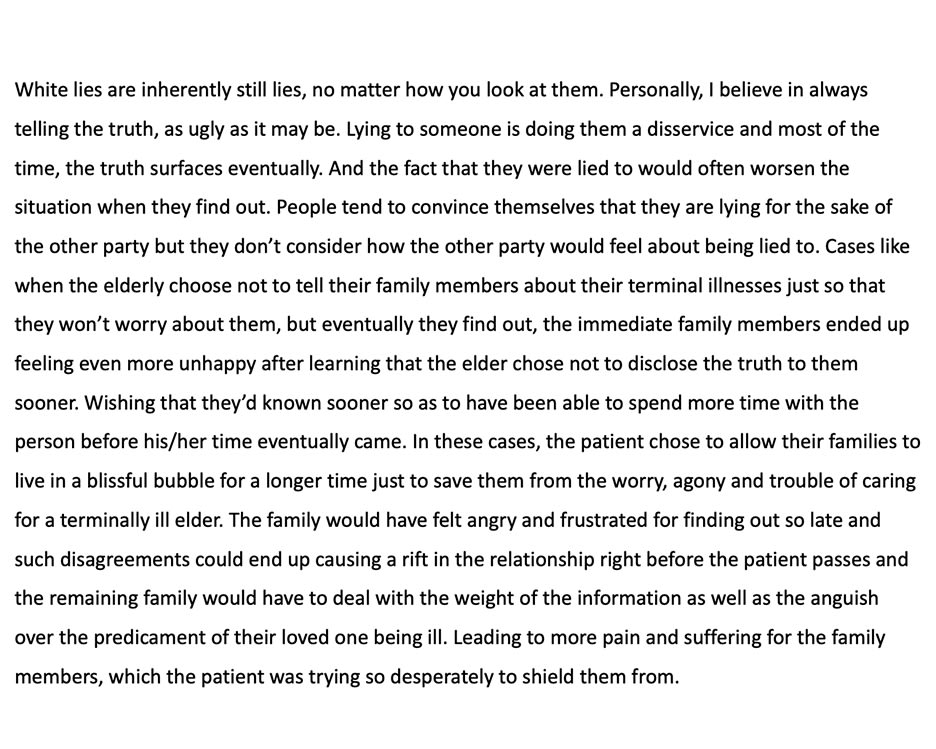

ESSAYS

Assoc Prof Sherwood found similar issues in the essays.

The first was filled with fluctuations in diction, too much repetition and other issues. It read “like the first draft of an essay”.

He was not far off: it was a slightly edited version of a school essay I had written when I was 16 years old.

Apparently, my habitual use of metaphors also gave it a human touch, Assoc Prof Sherwood said.

The other essay was “so polished that I think it might have been the one 'written' by the bot”, he said. By now, he was assuming that the bot had been taught good grammar.

So, human error set me apart once again… yay? I was happy to hear that my writing style was distinctive and more human but mildly embarrassed that it was also largely defined by the mistakes I had made.

Still, the AI bot had again been unable to pass itself off as a real person.

Human 2 : AI Bot 0

FICTIONAL WORK

I was confident my piece of fiction would triumph over the AI bot's effort.

Conveying emotion through writing, and creating characters and scenarios that make readers feel for them, was what I had spent three years being trained to do.

A robot couldn't outdo me in evoking emotions in the reader, surely?

Which was why I was ecstatic when Assoc Prof Sherwood called the bot’s story lifeless. Phew.

He explained that the characters in the bot’s story were “bots themselves!”, simply doing one thing and then moving on to another as the story progressed.

The story failed to evoke any empathy for its characters: it contained the “raw material of the story” but lacked “the causality that would make for an interesting plot”.

And though my story had some amateurish mistakes, it featured “dialogue, conflict, varying time signatures, some descriptive detail, and characters for whom the reader can actually start to feel”, he said.

“One senses that the writer of the second story knows what constitutes a good story, and is trying to achieve that effect,” he added.

Turns out I did learn something from my three years in school after all!

The final scoreline: A decisive 3-0 win to the humans.

IT'S OKAY TO BE HUMAN

Was I embarrassed that an academic called out my grammar and structure? Sure, but then again, these were all pieces that I wrote when I was 16 or 17.

I'm fairly confident that my writing ability has improved slightly since then.

It is comforting to know that as “smart” as AI has become, it still feels artificial at its core. But don’t quote me on that when robots become our overlords in 10 years. Or not.